Can I Use Cloudwatch to Track File Downloads S3

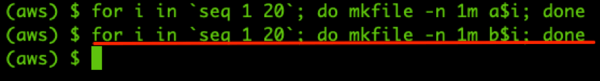

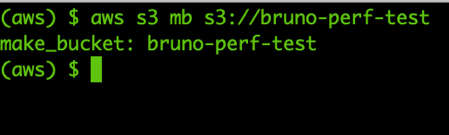

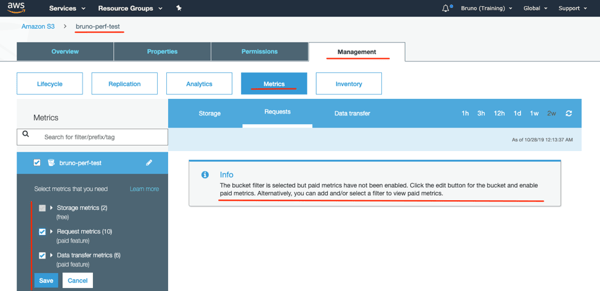

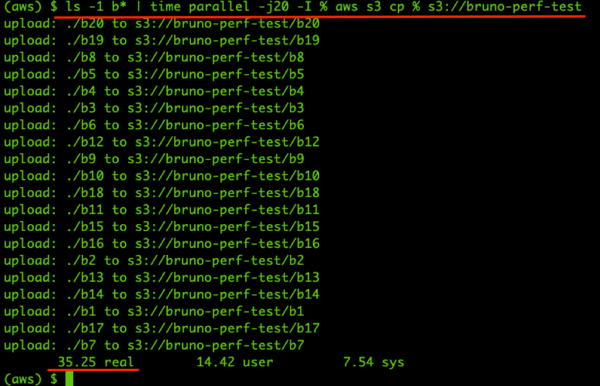

For anyone using Amazon Web Services, the Amazon S3 service should be familiar. After all, it was one of the first services available in AWS. This pioneering service has changed the way we use storage by providing nearly unlimited cloud storage at a low cost.

Even though Amazon S3 is a fully managed service that does not require much operational effort, unexpected performance issues can arise depending on your usage pattern and architecture. These issues typically result from a poor design pattern. What can you do to optimize Amazon S3 performance and adopt a better architectural design pattern?

As it turns out, AWS gives you a few tips and tricks. Caching frequently accessed content and implementing timeouts and retries in your applications are both good advice that often applies to non-AWS systems as well, and they are considered best practices in software architecture. Other recommendations are specific to S3 and are worth exploring. For example, scaling horizontally and parallelizing requests can make a huge difference, as we will see further below. Also, if you transfer data between geographically distant locations, you should consider enabling Amazon S3 Transfer Acceleration to minimize latency.

It can be hard for an AWS newcomer, or for someone without much cloud expertise, to identify issues with Amazon S3. NetApp® Cloud Volumes ONTAP users may be starting out with Amazon S3 and looking for a way to monitor the service. In this post we'll take a look at what AWS offers to keep on top of your object storage deployment with Amazon S3. By adopting these design patterns and following these architectural good practices, you will be able to take full advantage of the service.

S3 Monitoring Options in the AWS Ecosystem

When thinking about monitoring in the AWS ecosystem, Amazon CloudWatch is the service that first comes to mind. After all, it is the de facto standard for monitoring on AWS and provides several capabilities such as metrics (standard and custom), log aggregation, and alerts.

But Amazon S3 is not your typical service: it doesn't really have logs of its own, in the sense that the applications using it do their logging outside of it (whether in Amazon CloudWatch or elsewhere). The main logs directly related to Amazon S3 usage come from AWS CloudTrail (if enabled), which tells us who did what and when; S3 can also write optional server access logs. Therefore, to monitor performance we need to rely heavily on Amazon CloudWatch metrics.

By default, every Amazon S3 bucket has the Storage metrics enabled. It is possible (for a fee) to enable additional metrics related to Requests and Data Transfer. To monitor the performance of S3 and make use of the available metrics, you can use the built-in dashboards inside the S3 Management console. If you want more control over those metrics and more advanced capabilities, you can use Amazon CloudWatch to create your own dashboards or configure alarms.

This flexibility and tight AWS service integration enable you to automate and extend the monitoring process. For example, with the metrics stored in CloudWatch, you can use AWS Lambda to implement business logic that acts on them and notifies people via Amazon Simple Notification Service (SNS). Another automation scenario is to act on CloudTrail log events: your Lambda business logic could be triggered when a user performs a certain action in your Amazon S3 bucket. This makes the combination a versatile and powerful tool.

Testing and Monitoring AWS S3 Performance in Practice

Testing Amazon S3 performance is not a trivial task. Many different factors and techniques can influence performance when using Amazon S3. We are going to give a practical example of a good design pattern: parallelizing requests to improve performance.

1. Using the command `for i in $(seq 1 20); do mkfile -n 1m a$i; done` (`mkfile` is available on macOS), we generate 20 unique files of 1 MB each, named a[1..20]. With the same process, we also generate b[1..20]. This gives us two datasets of 20 MB each, for a grand total of 40 MB.

   Figure: Generate test files a[1-20] and b[1-20].

2. We continue by making a new S3 bucket to use as the destination for our test files, with the command `aws s3 mb s3://<bucket_name>`. The bucket name needs to be globally unique within AWS. The commands that follow use a bucket named bruno-perf-test.

   Figure: Make an S3 bucket to test and monitor the performance.

3. We finish the preparation by enabling the additional Request and Data Transfer metrics in the S3 bucket configuration. When enabling these metrics, keep in mind that it might take several minutes until they become visible in the dashboard.

   Figure: Enable the Request and Data Transfer metrics in the AWS S3 bucket.

1. We start our test by uploading half of our dataset (i.e., 20 MB) to the S3 bucket. We want to measure the performance of putting data into the S3 bucket with single, sequential requests. To achieve that we use the command `time for i in $(ls -1 a*); do aws s3 cp $i s3://bruno-perf-test; done`. This iterates over the files and issues one put request at a time to Amazon S3.

   Figure: Upload the a[1-20] test files to AWS S3 bucket.

With single requests, it took a bit over four minutes to upload the 20 files.

Multiple Requests (Parallelization) Putting Data into an S3 Bucket

To test the performance of parallelizing multiple requests, we will use the GNU parallel tool. If you are using macOS, you can install it via Homebrew with the command `brew install parallel`.

2. In the terminal, we initiate the upload of the files b[1..20] in parallel (20 jobs) using the command `ls -1 b* | time parallel -j20 -I % aws s3 cp % s3://bruno-perf-test`.

   Figure: Upload the b[1-20] test files to AWS S3 bucket in parallel.

You should notice an immediate and visible performance increase. By parallelizing the upload requests, we are able to upload the dataset in 35 seconds.

Visualize S3 Metrics as Part of the AWS Monitoring Services

The two tests we just conducted will also be visible in the Amazon S3 Metrics panel and in Amazon CloudWatch. This is how to view them:

1. In Amazon S3, go to the bucket configuration. Under the Management section you will find the Metrics panel.

2. Explore the different dashboards related to Storage, Requests, and Data Transfer. Select 1h (the last hour) as the time window for a more detailed visualization.

   Figure: Visualize the BytesUploaded metric graph in the AWS S3 Metrics.

3. By clicking on "View on CloudWatch" you will be able to view those metrics in CloudWatch and build your own dashboards. This also enables you to properly monitor the performance of Amazon S3 by using CloudWatch-native capabilities (e.g., dashboards, metric aggregation, alerting).

   Figure: Visualize the BytesUploaded metric graph in the AWS CloudWatch.

Conclusion

Understanding how to monitor your Amazon S3 buckets and how to make your architecture more efficient for better S3 performance is incredibly important when designing a cloud-native system. It translates directly into lower costs and higher speed.

Now that we have demonstrated the big difference that parallelization can make for throughput when putting data into Amazon S3 (about 35 seconds in parallel versus a bit over four minutes sequentially for the same amount of data), you can take that into account when designing your system. For example, instead of using a single EC2 instance to upload data to S3, you can scale horizontally across multiple instances for a performance gain.

If you are concerned about storage efficiency and ensuring that your data is protected and highly available, you might also be interested in NetApp Cloud Volumes ONTAP for AWS. Also available for Azure and Google Cloud, Cloud Volumes ONTAP gives users data management features that aren't available in the public cloud, including space- and cost-efficient storage snapshots and instant, zero-capacity data cloning.
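The two ideas in this post, parallel uploads and reading the BytesUploaded request metric from CloudWatch, can also be sketched in Python. This is a minimal illustration rather than the method used in the test: the helper names (`upload_in_parallel`, `request_metric_query`, `main`) are hypothetical, the boto3 SDK is assumed to be installed and configured with credentials, and the `FilterId` value `EntireBucket` is an assumption that must match the name of the request metrics configuration enabled on your bucket.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta, timezone


def upload_in_parallel(paths, upload_one, max_workers=20):
    """Call upload_one(path) for every path on a thread pool.

    Results are returned in the same order as the input paths.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(upload_one, paths))


def request_metric_query(bucket, metric_name="BytesUploaded",
                         filter_id="EntireBucket", minutes=60, now=None):
    """Build keyword arguments for CloudWatch get_metric_statistics.

    filter_id is an assumption: it must match the request metrics
    configuration enabled on the bucket.
    """
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/S3",
        "MetricName": metric_name,
        "Dimensions": [
            {"Name": "BucketName", "Value": bucket},
            {"Name": "FilterId", "Value": filter_id},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,  # request metrics are reported at one-minute intervals
        "Statistics": ["Sum"],
    }


def main(bucket="bruno-perf-test"):
    """Upload b1..b20 in parallel, then print BytesUploaded per minute."""
    import boto3  # imported here so the helpers above work without the SDK

    s3 = boto3.client("s3")
    paths = [f"b{i}" for i in range(1, 21)]
    # One put request per file, up to 20 in flight at once.
    upload_in_parallel(paths, lambda p: s3.upload_file(p, bucket, p))

    cloudwatch = boto3.client("cloudwatch")
    stats = cloudwatch.get_metric_statistics(**request_metric_query(bucket))
    for point in sorted(stats["Datapoints"], key=lambda d: d["Timestamp"]):
        print(point["Timestamp"], point["Sum"])
```

Calling `main()` requires AWS credentials, the test files in the working directory, and request metrics already enabled on the bucket; as noted earlier, new datapoints may take several minutes to appear. The upload function is passed in as a parameter so the parallelization logic can be exercised without touching AWS.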


Source: https://cloud.netapp.com/blog/aws-s3-performance-tuning-and-monitoring
